Prompt Engineering IDEs: VS Code for AI

Everything you need to know about Integrated Development Environments (IDEs) for Prompt Engineering and AI Development

Published on June 6, 2023 by

Toni Engelhardt and GPT-4

Introduction

With the release of OpenAI's ChatGPT in November 2022, we have entered a new era of work and product development. Thanks to the superhuman capabilities of Large Language Models (LLMs), many digital text-based routine tasks can now be automated.

A recent study by Tyna Eloundou et al. found that around 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of LLMs.

Just ask the algorithm to do it, right?

Well, not so fast!

Recent language models can indeed take many laborious tasks off our hands, but we need to ask the right questions, and that is why the field of Prompt Engineering is such a hot topic right now.

Prompt Engineering

The art of talking to machines. Let's take a look.

What is Prompt Engineering?

Prompt Engineering is the process of designing prompts that are effective in terms of cost and quality to obtain desired outputs from an LLM. In this post we won't concern ourselves with the technical details. You can find those, as well as all the different Prompt Engineering domains, in the related Wiki article.

Let's talk about tasks first.

Which tasks can be automated with LLMs?

Before we start optimizing prompts, let's take a look at the following two distinct types of tasks to see whether our time is well spent or not:

- One-time tasks

- Repetitive tasks

One-time tasks

Examples

- Retrieving specific information from the internet, e.g. "What happened in 1971?"

- Editing documents, e.g. "help me complete this post section"

One-time tasks refer to standalone queries or questions where we need an answer only once. These tasks are often best suited for conversational or chat-based interfaces (e.g. the ChatGPT app), where the user can engage in an interactive exchange with the LLM. The primary goal is to guide the model to provide the desired information by posing questions judiciously.

Question and response accuracy are not the primary concern for one-time tasks as the user can ask follow-up questions and request clarifications to improve the outputs. We may or may not call this interactive approach Prompt Engineering, but it requires some practice, skill, and creativity to get useful results for the task at hand. Note that this might get easier as the models get better.

While not suitable for automation, conversational mode unlocks the full potential of LLMs.

Repetitive tasks

Examples

- Improve copy based on certain criteria, e.g. "rewrite these emails in formal language"

- Extract information from incoming documents, e.g. "summarize this list of complaints"

- Classify user-generated items, e.g. "determine the sentiment of these reviews"

Repetitive tasks are executed more than once, either periodically or on demand. To be viable, these tasks require the model to consistently generate a useful output in a single interaction. Hence, accuracy and precision are paramount when designing prompts for task automation. Crafting these high-precision prompts is what we usually refer to when we speak of Prompt Engineering. The process is much more difficult than chat mode, which is why tooling is more important here.
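To make "consistently useful in a single interaction" a bit more tangible, here is a minimal sketch of how one might measure it: the same static prompt is run once per input and the outputs are compared against expected labels. The prompt wording, the test cases, and `fake_model` (a stand-in so the example runs offline) are all assumptions for illustration, not part of any real tool.

```python
from typing import Callable

# Static prompt with a single placeholder; the wording is illustrative only.
PROMPT = (
    "Determine the sentiment of this review. "
    "Answer with exactly one word: positive or negative.\n\nReview: {review}"
)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call so the sketch runs offline."""
    review = prompt.split("Review: ", 1)[1].lower()
    return "positive" if "great" in review or "love" in review else "negative"


def accuracy(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Run the same prompt once per input and return the share of correct outputs."""
    hits = 0
    for review, expected in cases:
        output = model(PROMPT.format(review=review)).strip().lower()
        hits += output == expected
    return hits / len(cases)


# Hand-labeled examples (invented for this sketch).
CASES = [
    ("Great product, works as advertised.", "positive"),
    ("Broke after two days.", "negative"),
    ("I love the new design.", "positive"),
    ("Not great, not terrible.", "negative"),
]

score = accuracy(fake_model, CASES)
print(f"accuracy: {score:.2f}")  # prints: accuracy: 0.75
```

The last case trips up the keyword-based stand-in on purpose: a single score over a set of inputs reveals weaknesses that one-off testing in a chat window would probably miss.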

In the following, we'll look deeper into Prompt Engineering for repetitive tasks.

While certainly more limited in scope, static prompts that only require a single request to an AI model unlock the potential for automating expensive digital processes and therefore have a huge economic value when deployed appropriately.

But how to develop such valuable prompts?

Prompt Engineering IDEs

IDE stands for Integrated Development Environment, a term originally coined in modern software engineering. Since there are so many parallels between software engineering and prompt engineering, it makes a lot of sense to simply adopt the terminology. In fact, both of these fields refer to the development of instructions for microprocessors, only that the latter has a higher level of abstraction (or compilation).

What is a Prompt Engineering IDE?

Engineering reliable prompts for deployment in apps, integrations, and automated workflows demands a lot of experimentation and fine-tuning. Simple testing environments like the OpenAI playground are great to get started, but they are not sufficient for advanced use. For that, we need a more sophisticated tool stack. Think VS Code for software engineering or Bloomberg Terminal for stock trading.

Definition by ChatGPT:

A prompt IDE (Integrated Development Environment) is a software tool that provides a user-friendly interface for creating and running prompts to interact with language models like ChatGPT. It assists users in experimenting with different prompts and receiving responses from the language model in real time, making it easier to fine-tune and test various inputs and outputs.

Why you should use a Prompt Engineering IDE

Imagine telling a software engineer to develop the code for an app or website with Microsoft Word. Spoiler: they'd laugh you out of the room...

Why? Code is just text, right? Well, because MS Word doesn't have the tooling that makes writing code fun and efficient. Likewise, the OpenAI playground is pretty basic. You get a text input and some sliders to adjust model parameters, but that's it. If you try to construct even moderately complex prompts, you'll be lost before you even get started.

Proper tooling for prompt design

So what do we need? Which features would the ideal prompt engineering IDE need to have? This is of course dependent on the specific project, but here's a list of the features that I think would generally be nice to have:

- composability

Oftentimes you want to reuse instructions, experiment with distinct versions of a paragraph, or re-arrange sections via drag and drop.

- traceability

When playing around with different variations of a prompt, you want to keep track of which exact prompt generated which output.

- input variables

When testing prompts for real-world scenarios, it's handy if you can use variables. For instance, if you have a prompt that uses a person's name in multiple places, it's convenient to define the name as a variable. That way you can easily run the prompt for different names without having to replace the name everywhere it is used.

- prompt library

A central place where you can store all your prompts and organize them in projects and folders.

- version control system

Just like with code (and git), you want to be able to track changes to your prompts and revert to previous versions if necessary.

- test data management system

Once the prompt is ready, you want to test its performance on a diverse set of real-world input data to make sure that it is robust.

- prompt deployment pipeline

This goes a step further, but ideally, once you have a prompt that works, would it not be nice to directly use it in your apps, integrations, and workflows without having to export and integrate it? Prompt endpoints (AIPIs) make this possible (see below).
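Three of these features (composability, input variables, and traceability) are easy to illustrate in a few lines of Python. The following is only a rough sketch under my own assumptions: `Block`, `PromptRun`, `History`, `compose`, and `prompt_id` are hypothetical names made up for this example and do not describe how any particular IDE works internally.

```python
import hashlib
from dataclasses import dataclass, field
from string import Template


@dataclass(frozen=True)
class Block:
    """A reusable piece of prompt text; may contain $variables."""
    name: str
    text: str


@dataclass
class PromptRun:
    """Links one rendered prompt to the output it produced."""
    prompt_id: str
    block_names: list[str]
    variables: dict[str, str]
    output: str


def compose(blocks: list[Block], variables: dict[str, str]) -> str:
    """Join blocks in order and fill in the variables."""
    raw = "\n\n".join(block.text for block in blocks)
    # substitute() raises KeyError if a variable is missing,
    # which surfaces incomplete inputs early.
    return Template(raw).substitute(variables)


def prompt_id(prompt: str) -> str:
    """Short content hash: identical prompts always map to the same id."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]


@dataclass
class History:
    """Keeps a record of which prompt produced which output."""
    runs: list[PromptRun] = field(default_factory=list)

    def record(self, blocks: list[Block], variables: dict[str, str], output: str) -> PromptRun:
        prompt = compose(blocks, variables)
        run = PromptRun(prompt_id(prompt), [b.name for b in blocks], dict(variables), output)
        self.runs.append(run)
        return run


# Two interchangeable versions of the task block, sharing one role block.
role = Block("role", "You are a polite assistant writing to $name.")
task_v1 = Block("task_v1", "Rewrite the email below in formal language.")
task_v2 = Block("task_v2", "Rewrite the email below formally. Address $name by name.")

history = History()
for task in (task_v1, task_v2):
    # The output string is a placeholder; a real run would store the model's reply.
    run = history.record([role, task], {"name": "Ada"}, output="(model output here)")
    print(run.prompt_id, run.block_names)
```

Swapping `task_v1` for `task_v2` changes the content hash, so every stored output can be traced back to the exact prompt and variable values that produced it.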

Access to multiple AI platforms and LLMs

Aside from the above-mentioned functionality, perhaps the most important feature of all is the ability to test your prompts with LLMs from different AI platforms (OpenAI, Bard, Anthropic, Cohere, Hugging Face, Replicate, etc.), without having to copy-paste them manually into different playgrounds. The IDE should be able to interface with all relevant providers and allow you to compare outputs side by side.
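One way to picture the side-by-side comparison is a thin adapter per provider, all sharing the same signature. The sketch below assumes exactly that; `provider_a` and `provider_b` are hypothetical stubs that return canned text, not real SDK calls, so the example runs without any API keys.

```python
from typing import Callable


# Each adapter would hide one provider's client behind the same signature.
# These stubs are hypothetical placeholders, not real SDK calls.
def provider_a(prompt: str) -> str:
    return f"[A] {prompt.upper()}"


def provider_b(prompt: str) -> str:
    return f"[B] {prompt[::-1]}"


PROVIDERS: dict[str, Callable[[str], str]] = {
    "provider_a": provider_a,
    "provider_b": provider_b,
}


def compare(prompt: str, providers: dict[str, Callable[[str], str]]) -> dict[str, str]:
    """Send the same prompt to every provider and collect the outputs by name."""
    results: dict[str, str] = {}
    for name, complete in providers.items():
        try:
            results[name] = complete(prompt)
        except Exception as error:
            # One failing provider should not hide the others' results.
            results[name] = f"<error: {error}>"
    return results


for name, output in compare("hi", PROVIDERS).items():
    print(f"{name}: {output}")
```

With real adapters in place of the stubs, the same `compare` call would replace the manual copy-paste between playgrounds.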

You can read more about this topic in the "Stripe for AI: One Platform that Connects to all LLMs" post.

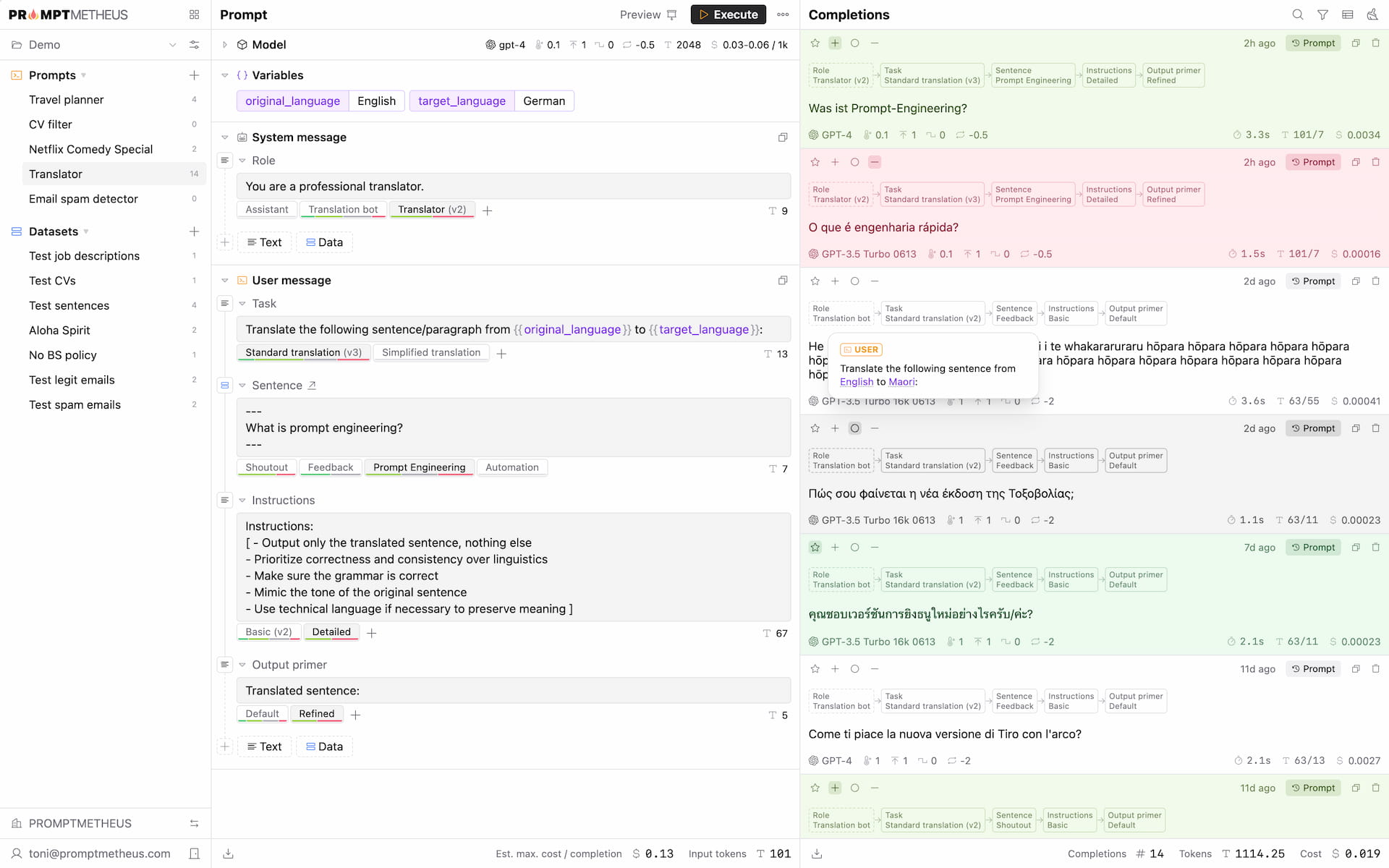

Introducing PROMPTMETHEUS

Despite high demand, there are no established IDE solutions on the market yet – the entire discipline of Prompt Engineering is still in its infancy. That's why PROMPTMETHEUS came to life, an attempt to fill this gap.

PROMPTMETHEUS is designed from the ground up to make it easy to compose, test, optimize, and deploy complex prompts to supercharge your apps, integrations, and workflows with the mighty capabilities of Artificial Intelligence.

The FORGE playground (free) has a modular approach to prompt design and allows you to compose prompts by combining different text and data blocks like Lego bricks (composability). The app automatically keeps track of the entire design process and provides full traceability for how each output was generated, along with statistics on how each block performs. This way, you can playfully experiment with different prompt configurations and quickly find the best solution for your use case, without having to worry about losing track of your work.

You can also compare outputs from different AI platforms and fine-tune model parameters (Temperature, Top P, Frequency Penalty, Presence Penalty, Stop Sequences, System Message, ...) to optimize the results.

Once you have a prompt ready and tested, you can either export it in a variety of formats (.txt, .xls, .json, etc.) or directly deploy it to a bespoke AIPI endpoint or UI, where you and your apps and services can conveniently interact with it.

FAQ

What is an AIPI?

An AIPI (AI Programming Interface) is similar to a conventional API, but instead of communicating with an application, its endpoints mediate interactions with an AI model.
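Conceptually, such an endpoint could look like the sketch below: the caller sends only the input variables, and the stored prompt plus the model call stay behind the interface. This is purely an illustration under my own assumptions and not the actual PROMPTMETHEUS API; `DEPLOYED_PROMPTS`, `handle_request`, and `stub_model` are hypothetical names, and the model is stubbed so the example runs offline.

```python
import json
from string import Template
from typing import Callable

# Hypothetical registry of deployed prompts, keyed by endpoint name.
DEPLOYED_PROMPTS = {
    "extract-emotions": Template(
        "List the emotions expressed in this journal entry.\n\nEntry: $entry"
    ),
}


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns the same canned answer."""
    return "joy, relief"


def handle_request(
    prompt_name: str, body: str, model: Callable[[str], str] = stub_model
) -> tuple[int, str]:
    """Take a JSON body of variables and return (status code, JSON response)."""
    template = DEPLOYED_PROMPTS.get(prompt_name)
    if template is None:
        return 404, json.dumps({"error": f"unknown prompt: {prompt_name}"})
    try:
        variables = json.loads(body)
        prompt = template.substitute(variables)
    except (json.JSONDecodeError, KeyError, TypeError):
        # Malformed JSON, a missing variable, or a body that is not an object.
        return 400, json.dumps({"error": "invalid or incomplete variables"})
    return 200, json.dumps({"output": model(prompt)})


status, response = handle_request("extract-emotions", '{"entry": "Today went well."}')
print(status, response)
```

The calling app never sees the prompt text itself, which is what separates this from shipping the prompt inside the app.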

Get started with the FORGE playground or take a look at this demo that showcases how to use PROMPTMETHEUS to craft a prompt that can extract emotions from a journal entry.

Thanks for reading and stay tuned for exciting updates in the future!